Preventing Extremist Violence Using Existing Content Moderation Tools

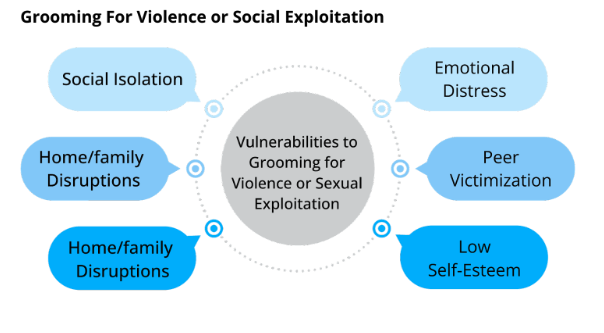

Accurate content moderation can save lives by acting as an early warning system for offline extremist violence and by removing the fuel that incites it. With the rapid growth of automated content moderation, platforms can fine-tune and customize widely accessible detection tools on known violent extremist user-generated content to improve their own detection methods. Multiple AI-based detection approaches can identify the users, conversations, and communities that signal a high likelihood of extremist violence. With better real-time detection, platforms can break up harmful and criminal communities and disrupt the online influence processes that lead to extremist violence.